Running AI locally could be a big selling point for powerful PC hardware. But the PC industry isn’t making a compelling case.

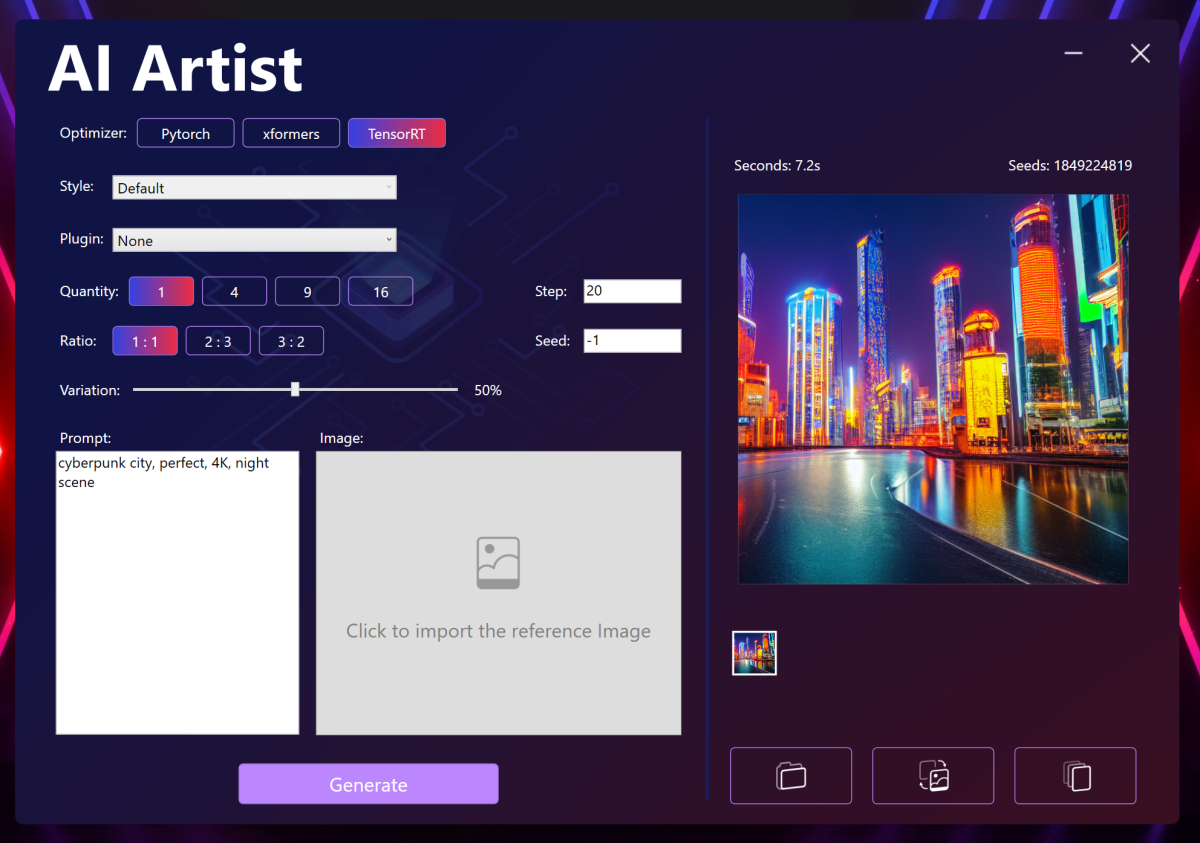

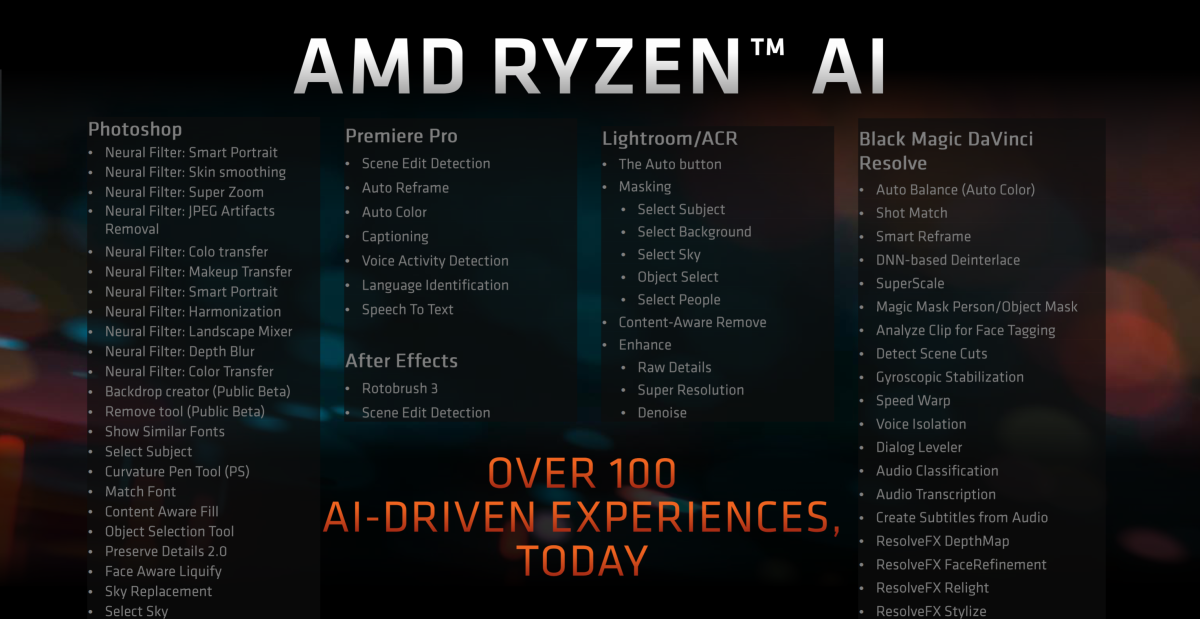

It’s not enough to champion AI hardware that supports local large language models, generative AI, and the like. Hardware vendors need to step up and serve as a middleman — if not an outright developer — for those local AI apps, too.

Qualcomm almost has it. At MWC 2024 (formerly known as Mobile World Congress, and one of the world's largest mobile trade shows), the company this week announced the Qualcomm AI Hub, a repository of more than 75 AI models specifically optimized for Qualcomm and Snapdragon platforms. Qualcomm also showed off a seven-billion-parameter local LLM that can accept audio inputs, running on a (presumably Snapdragon-powered) PC. Finally, Qualcomm demonstrated another seven-billion-parameter LLM running on Snapdragon phones.