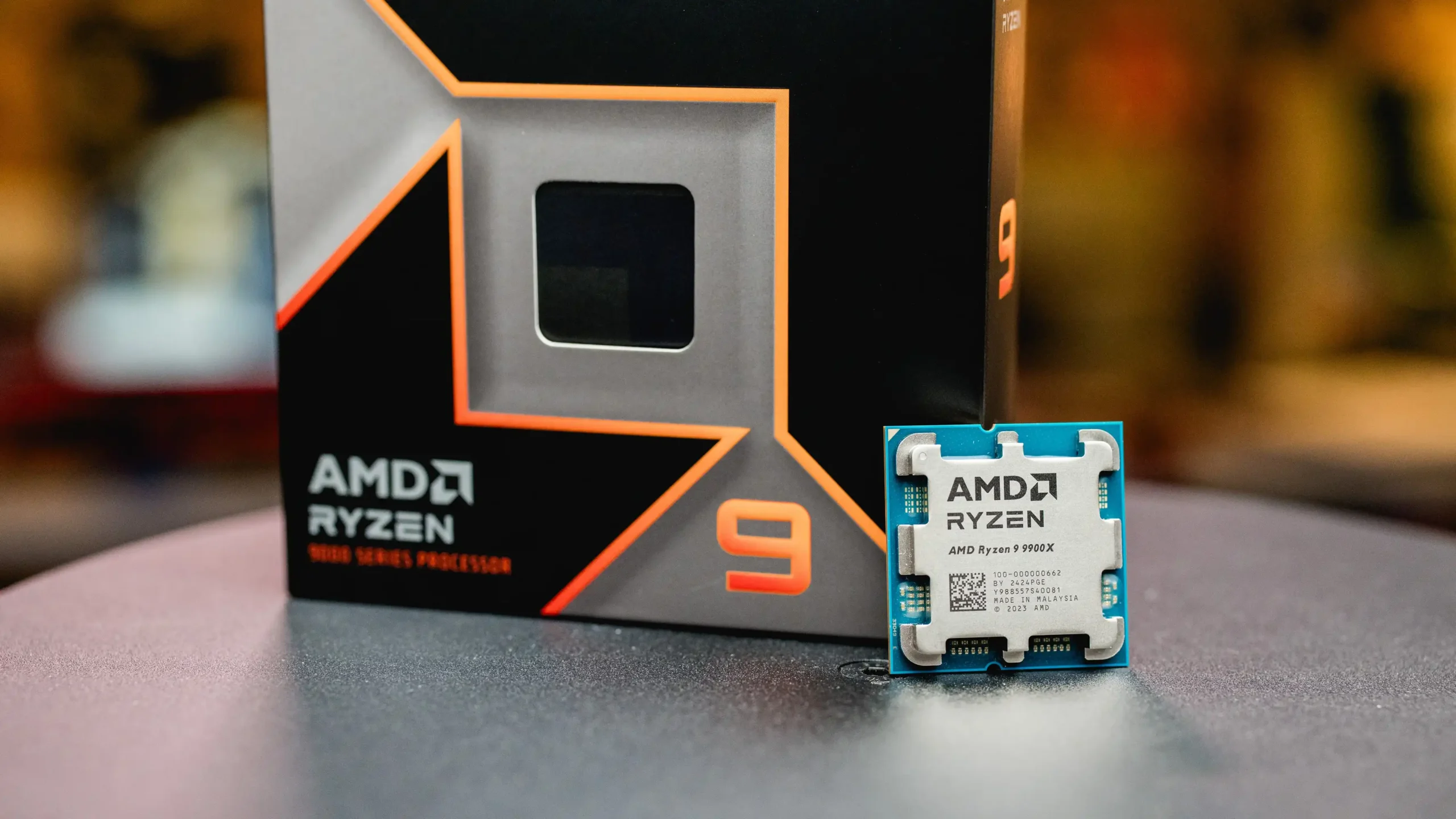

AMD’s highly anticipated Ryzen 9000 desktop processors launched earlier this month, but the gaming performance improvements seen by independent reviewers didn’t quite live up to the hype generated by AMD’s early marketing claims. So, what went wrong?

It turns out that a mix of factors led to the discrepancy. After extensive analysis by both reviewers and AMD, the company released a community post last night that shed light on the issue. The performance gap boils down to several variables: differences in the Windows mode used during testing, whether Virtualization-Based Security (VBS) was enabled, how competing Intel systems were configured, and the specific games selected for benchmarking.

David McAfee, who leads AMD’s client channel segment, joined us on a special edition of The Full Nerd to clarify the situation. According to AMD, one key issue was the difference in how AMD conducted its tests compared to independent reviewers. AMD’s internal tests were run in “Super Admin” mode, which produced results that reflected branch prediction optimizations for the “Zen 5” architecture that weren’t present in the Windows versions reviewers used.

As McAfee explained, AMD’s automated testing framework, which operates in Super Admin mode, was established long ago, when there was little performance difference between that mode and standard user mode. Over time, that difference became significant, creating a blind spot for AMD. The company has since adjusted its data collection methods to align more closely with how reviewers and gamers use their systems.

The blog post also highlighted how game selection and even the specific scenes within games can influence benchmark results. McAfee emphasized that the balance of CPU and GPU demands in different game sections can lead to substantial variations in relative performance between competing products.
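To see why scene selection matters so much, consider a rough sketch of a common simplification: each frame takes as long as the slower of the CPU and the GPU. This is not AMD's methodology, and every number below is hypothetical, chosen only to illustrate the effect.

```python
def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second when the slower of CPU and GPU sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def relative_gain(cpu_a_ms: float, cpu_b_ms: float, gpu_ms: float) -> float:
    """Percent FPS advantage of CPU A over CPU B in a scene with the given GPU cost."""
    return (fps(cpu_a_ms, gpu_ms) / fps(cpu_b_ms, gpu_ms) - 1.0) * 100.0


# Hypothetical per-frame costs: CPU A needs 5 ms, CPU B needs 6 ms.
cpu_bound = relative_gain(5.0, 6.0, gpu_ms=4.0)   # GPU finishes first, so the CPU sets the pace
gpu_bound = relative_gain(5.0, 6.0, gpu_ms=10.0)  # GPU is the limit for both systems

print(f"CPU-bound scene: CPU A is {cpu_bound:.1f}% faster")
print(f"GPU-bound scene: CPU A is {gpu_bound:.1f}% faster")
```

Under these assumed numbers, the same two processors look about 20% apart in the CPU-bound scene and identical in the GPU-bound one, which is the kind of swing that can separate one benchmark suite's results from another's.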

One important takeaway from McAfee’s interview is that AMD isn’t blaming reviewers for the discrepancies. Instead, AMD acknowledges that differences in testing methods and system configurations were the root cause. The full interview goes into more detail and underscores how much testing methodology can affect benchmark results.